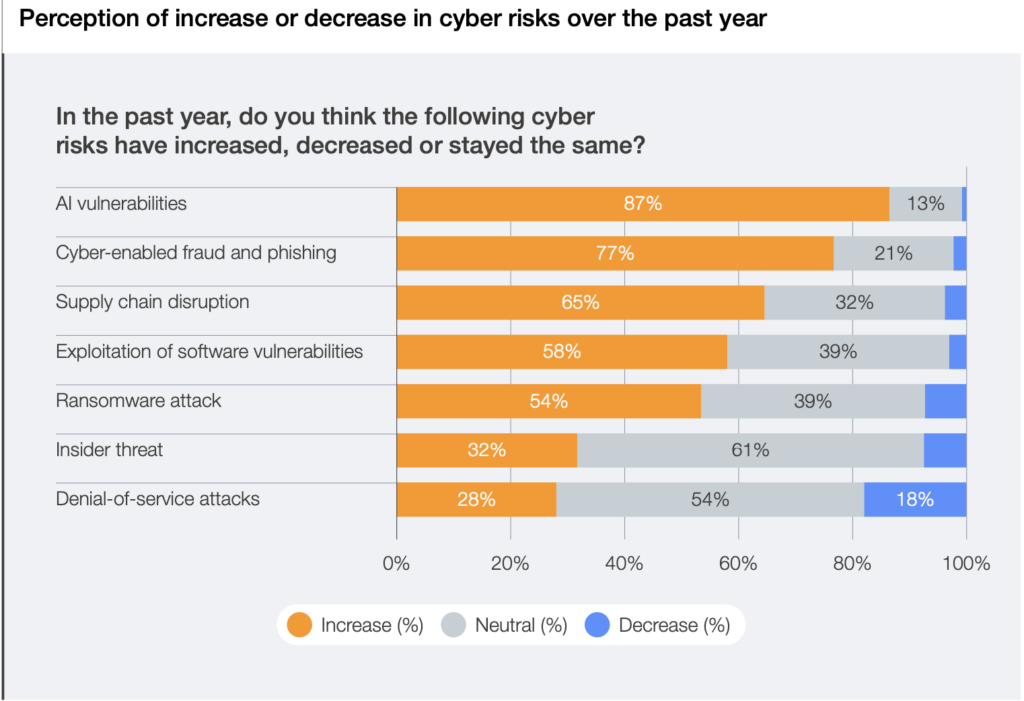

In the digital landscape of 2026, learning how to protect yourself from AI is a critical necessity. According to the Global Cybersecurity Outlook 2026, AI is the most significant driver of change in the year ahead, cited by 94% of leaders. At the same time, 87% identify AI-related vulnerabilities as the fastest-growing cyber risk, so we must take immediate action to stay safe while using AI.

Understanding AI Dangers and Deepfakes

The most pervasive AI dangers today involve the weaponization of generative models to create deepfakes. Threat actors are leveraging AI to enhance the scale, speed, and sophistication of social engineering attacks.

- Deepfake Scams: Attackers use generative AI to produce realistic phishing emails and deepfake audio and video capable of evading human scrutiny.

- Personal Impact: 73% of individuals reported being personally affected by cyber-enabled fraud in the past year.

- The Global Response: To ensure generative AI content is safe, organizations are shifting toward continuous verification and audit trails grounded in zero-trust principles.

How to Stay Safe Using AI Tools Like Microsoft 365 Copilot

Many users ask what Microsoft 365 Copilot is and how to use it securely. Microsoft 365 Copilot is an AI assistant built into Microsoft 365 apps that helps automate tasks; however, because it can draw on your organization's data, it also introduces new data-leak risks.

How to stay safe while using AI agents:

- Human-in-the-Loop: 41% of organizations mandate that AI-generated responses must be validated by a human before implementation.

- Governance Frameworks: AI can improve cybersecurity only when deployed within sound governance that keeps human judgment at the center.

- Zero-Trust Principles: Treat every interaction with an AI agent as untrusted by default and verify it continuously.

AI-Generated Media Protection: Safeguarding Your Content

A major concern in 2026 is how to protect your images from AI scrapers and how to avoid AI-generated content built from stolen material.

- Data Leaks: Data leaks associated with genAI are now a leading concern for 34% of organizations, marking a striking pivot from previous years.

- Website Security Check: Perform a professional URL Scan on any site asking for your media. Modern scammers use “Shadow AI” to create ephemeral, hyper-realistic websites that bypass traditional filters.

- Neural Artifacts: Use forensic tools to check videos for synthetic patterns. This is one of the most effective ways to find out whether the content shown in a video is actually authentic.

Practical Ways to Protect Yourself from AI Scams

So how can we protect ourselves from AI on a daily basis?

- Verify Before You Click: 64% of organizations now assess the security of AI tools before deploying them; you should apply the same scrutiny to every link you receive.

- Close the Skills Gap: 54% of respondents cite insufficient knowledge as a primary hurdle to AI safety. Invest in your own AI literacy.

- Use UncovAI: Our forensic engine helps you stay safe while using AI by detecting neural artifacts and AI-generated phishing sites in under 3 seconds.

In conclusion, your digital safety depends on moving from awareness to action: running a forensic URL Scan and staying informed about AI dangers are essential steps toward restoring the truth in 2026.

Stop the fakes. Restore the truth. 👇

[👉 Get the UncovAI WhatsApp Bot]

[👉 Install UncovAI for Firefox & Chrome]